Understanding the Risks of Deploying Artificial Intelligence: Key Insights from Arthur D. Little

As the digital landscape in the Middle East rapidly evolves, understanding the challenges of integrating AI technologies into businesses, and the safeguards needed to do so ethically and effectively, is becoming increasingly critical.

The accelerated development and adoption of AI, particularly generative AI models like ChatGPT, have brought significant benefits. However, the risks associated with these technologies cannot be overlooked, and businesses urgently need proactive risk management strategies to address them effectively.

Generative AI Biases and Ethical Standards

Generative AI models are known to perpetuate biases present in their training data. These biases can reinforce stereotypes and underrepresent minority views, and they appear across several dimensions, including:

- Temporal Biases: AI models may generate content that reflects outdated trends and viewpoints.

- Linguistic Biases: Predominantly English training data can lead to poor performance in other languages.

- Confirmation Biases: AI models may favor the knowledge stored in their parameters (their parametric memory) even when presented with contradictory evidence.

- Demographic Biases: Biases toward specific genders, races, or social groups, such as generating images of flight attendants predominantly as white women.

- Cultural Biases: AI models can exacerbate existing cultural prejudices.

- Ideological and Political Biases: AI can propagate specific political and ideological views from its training data.

Hallucinations and Model Limitations

AI models occasionally produce false information, known as hallucinations, which can include:

- Knowledge-based Hallucinations: Incorrect factual information.

- Arithmetic Hallucinations: Incorrect calculations.

For example, in November 2023, Google's AI chatbot Bard generated false accusations about consulting firms. Hallucination rates also vary considerably from one model to another.

Deepfakes and Cybersecurity Threats

The growing sophistication of AI technology has escalated the risks posed by deepfakes and cyberattacks. The ease of creating deepfakes and manipulating opinion with AI-generated content poses significant threats to societal stability. The surge in deepfake incidents, together with the greater credibility that AI lends to phishing attacks, highlights the urgent need for robust safeguards.

Proactive Risk Management

Businesses in the Middle East must adopt a proactive approach to AI risk management. Key recommendations include:

- Understanding Strategic Stakes: Identifying the specific challenges and strategic stakes of implementing AI.

- Conducting Risk Assessments: Building thorough risk assessments into the initial mapping of the AI opportunity landscape.

- Establishing AI Ethics Codes: Implementing clear AI ethics codes and cross-checking AI outputs.

- Upskilling Workforce: Training employees and leaders to understand and manage AI technologies.

- Addressing Trust and Cultural Issues: Facilitating smooth AI adoption by addressing employee trust and cultural issues.

Expert Insights

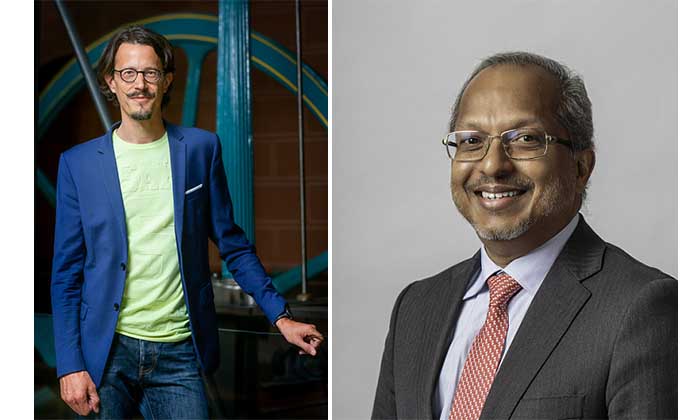

Dr. Albert Meige, Associate Director of the Technology & Innovation Management Practice at Arthur D. Little, emphasizes the importance of vigilance in AI deployment: “Generative AI holds immense potential, but we must be vigilant about its risks. It’s crucial that businesses in the Middle East adopt comprehensive risk management strategies to ensure that AI integration is both ethical and effective.”

Thomas Kuruvilla, Managing Partner at Arthur D. Little, Middle East, adds, “The Middle East is at the forefront of AI innovation, and with this leadership comes the responsibility to navigate the complexities of AI safely. Businesses need to harness AI’s power while mitigating its risks.”

Conclusion

Navigating the complexities of AI integration is essential for businesses that aim to enhance productivity and innovation without compromising ethical standards or public trust. By adopting these recommended guidelines, businesses can manage AI risks effectively and harness AI's potential for sustainable growth and development.
